Author's note. This article was originally published in SunWorld's July 1999 issue. SunWorld.com no longer exists and redirects the browser to ITworld. This is a copy of the article as it appeared on SunWorld's site.

There is a copy of the original article on another site, but it's not clear how long that copy will stay there.

Among the benefits of having a real-time monitoring system for your computer and networking equipment are easy identification of short-term problems, understanding the usage patterns of your systems and recording the data for long-term capacity planning. This article discusses the issues, challenges, requirements and steps to install your own real-time monitoring system.

All computer networks grow and expand as users take on new projects that push network and computer hardware to their limits, and additional hardware and software are needed to meet the users' needs. In addition to this long-term issue of capacity planning, there are short-term issues related to problem identification and resolution. Typically, both capacity planning and problem identification are based on the same set of data. For example, in heavily used web servers, Sun systems can drop packets inside the kernel (the nocanput parameter from netstat -k output). In the short term, identifying this problem is clearly important to the performance of your web server. The long-term trend in the number of packets dropped per second may indicate that more servers are needed. While many short-term monitoring tools exist, such as perfmeter and top, which can be used to monitor systems on a second-by-second basis, this article focuses on monitoring systems on both a short- and long-term basis, which excludes many of these tools.

Designing a real-time monitoring system presents several challenges. The first is managing large amounts of data; the data may be stored for many months to a year for long-term capacity planning. The second is determining how to make the data easily viewable.

I designed a real-time monitoring system for GeoCities based on the following requirements:

The ability to monitor many systems.

Measure and display short-term (daily) and long-term (yearly) trends.

Allow easy comparison of the same type of measurement between different systems.

Allow easy viewing of all system measurements on different time scales.

Plots are always up to date and always available.

The act of measuring a system should not adversely affect it, i.e. by placing a large additional load on it, impacting the TCP stack throughput, etc.

Since each site has its own requirements and problems, the best real-time monitoring solutions are those that allow easy customization of visualization options, the type of data to record, etc. Having access to the source code always helps in these matters as does designing for flexibility.

For Sun SPARC or x86 Solaris based monitoring, a freely available solution that meets the above requirements is a collection of tools that includes the following:

The SE toolkit from Adrian Cockcroft and Rich Pettit, together with orcallator.se, a heavily modified version of SE's percollator.se script, for collecting system data.

The Round Robin Database (RRD) library written by Tobias Oetiker (who also wrote the Multi Router Traffic Grapher - MRTG) for binary data storage and plot generation in the form of GIFs.

Orca, a Perl program I wrote that reads the data collected by orcallator.se, creates an HTML tree and uses RRD to generate GIF plots.

Orca requires the installation of the SE toolkit and the orcallator.se program on each monitored system. The SE toolkit contains a program, se, that, like Perl or the Bourne shell, reads and executes scripts, in this case written in a C-like language. SE's unique feature is its easy access to kernel data structures. This makes it popular for measuring, processing, and reporting such data.

Orcallator.se is a program written in the SE language and is a heavily modified version of the original SE percollator.se program. Its improvements include the recording of many more system parameters. In fact, almost any measurement made in the SE examples/zoom.se script is recorded by orcallator.se. It also allows users to easily choose which portions of the system are to be measured. Orcallator.se is designed so that it can measure each of the following subsystems: CPU, mutex contention, network interface cards, TCP stack, NFS, disk IO usage, the directory name lookup cache (DNLC), inode cache, RAM, and page usage. Additionally, orcallator.se can process NCSA and Squid style logs to generate statistics on the amount of traffic the web server receives, such as hit rate, bytes sent per second, etc. Orcallator.se appends its measurements as a single line to a text file every five minutes for later processing and viewing. For a proper Orca installation, all hosts being monitored should mount a common NFS shared directory and write orcallator.se's output there. For a good description of how orcallator.se and its predecessor percollator.se work, see

http://sunsite.uakom.sk/sunworldonline/swol-03-1996/swol-03-perf.html
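
To illustrate the idea of one text line per measurement interval, here is a minimal Python sketch of a reader for such a file. The layout it assumes (a first line naming the columns, then whitespace-separated numeric rows) and the column names in the sample are hypothetical and chosen only for illustration; they are not taken from orcallator.se's actual output.

```python
def parse_measurements(text):
    """Parse whitespace-separated rows whose first line names the columns.

    Assumes every field is numeric (hypothetical layout, not orcallator.se's).
    """
    lines = [ln for ln in text.splitlines() if ln.strip()]
    if not lines:
        return []
    columns = lines[0].split()
    rows = []
    for line in lines[1:]:
        fields = line.split()
        if len(fields) != len(columns):
            continue  # skip a malformed or partially written row
        rows.append(dict(zip(columns, (float(f) for f in fields))))
    return rows


# Hypothetical sample: a timestamp plus three CPU percentages, 300 s apart.
SAMPLE = """timestamp usr% sys% idle%
931000000 12.5 4.0 83.5
931000300 30.0 6.5 63.5
"""

rows = parse_measurements(SAMPLE)
for row in rows:
    print(row)
```

A flat layout like this is easy to append to from the collector and easy to read from any language, which is part of why a plain text file works as the hand-off point between the two programs.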

Orca makes use of a library written by Tobias Oetiker that provides a Round Robin Database (RRD). For users of Multi Router Traffic Grapher (MRTG), RRD will prove familiar. RRD provides a flexible binary format for the storage of numerical data measured over time.

An arbitrary number of different data streams are pushed into an RRD file, passed through an arbitrary number of consolidation functions and then permanently stored. So a single input data stream may result in several different consolidated streams inside the RRD file. Consolidation is the feature where an arbitrary number of measured data points are combined into a single data point. Available consolidation functions are the minimum, maximum and average. For example, six data points measured 5 minutes apart may be consolidated into a single 30-minute data point using either the average, minimum, or maximum of the six data points. The 30-minute consolidated data points may be used when plotting longer-term views, such as monthly data, while a separate consolidated stream in which each point consists of one input data point may be used in plotting a day-long view of the data.

Consolidation of input data provides long-term data storage in a reduced amount of disk space. The consolidated data is used when Orca plots longer-term views, such as the yearly plots of data. This gives RRD one of its key properties: the binary data files do not grow over time. Upon creation of an RRD data file, the user specifies how long a particular data point in a consolidated data stream will remain in the file. In Orca's case, 5-minute data is kept for 200 hours, 30-minute averaged data is kept for 31 days, 2-hour averaged data is kept for 100 days and daily averaged data is kept for 3 years. Such a data file is 50 Kbytes long. Finally, RRD can read an arbitrary number of RRD files and generate GIF plots from them.
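
The consolidation step can be sketched in a few lines of Python. This is only an illustration of the idea described above, not RRD's implementation: the function name `consolidate` and the sample values are made up, and the sketch ignores details such as missing samples and time alignment.

```python
from statistics import mean

# The three consolidation functions named in the text.
CONSOLIDATION_FUNCTIONS = {"average": mean, "minimum": min, "maximum": max}


def consolidate(points, steps, how="average"):
    """Combine each run of `steps` consecutive points into one point.

    A trailing partial group is dropped, since it does not yet cover
    a full consolidated interval.
    """
    func = CONSOLIDATION_FUNCTIONS[how]
    full = len(points) - len(points) % steps
    return [func(points[i:i + steps]) for i in range(0, full, steps)]


# Hypothetical CPU readings taken 5 minutes apart (one hour of data).
five_minute_cpu = [10.0, 20.0, 30.0, 40.0, 50.0, 60.0,
                   5.0, 5.0, 5.0, 5.0, 5.0, 5.0]

# Six 5-minute points become one 30-minute point.
thirty_minute_avg = consolidate(five_minute_cpu, 6, "average")
thirty_minute_max = consolidate(five_minute_cpu, 6, "maximum")

print(thirty_minute_avg)  # [35.0, 5.0]
print(thirty_minute_max)  # [60.0, 5.0]
```

The two results show why the choice of consolidation function matters: the average hides the 60 percent peak in the first half hour, while the maximum preserves it.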
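
The retention figures above determine how many data points each consolidated stream holds, and therefore roughly how large the file is. The following Python sketch works through that arithmetic. It assumes one data stream per file, 8 bytes per stored value, and 365-day years, and it ignores any file header; those are assumptions made for this estimate, not figures from the article.

```python
MINUTES_PER_HOUR = 60
MINUTES_PER_DAY = 24 * 60
BYTES_PER_VALUE = 8  # assumption: one double-precision value per data point

# (description, minutes covered by one point, retention in minutes)
archives = [
    ("5-minute data", 5, 200 * MINUTES_PER_HOUR),
    ("30-minute averages", 30, 31 * MINUTES_PER_DAY),
    ("2-hour averages", 120, 100 * MINUTES_PER_DAY),
    ("daily averages", MINUTES_PER_DAY, 3 * 365 * MINUTES_PER_DAY),
]


def rows_needed(step_minutes, retention_minutes):
    """Number of fixed slots needed to cover the retention period."""
    return retention_minutes // step_minutes


row_counts = {name: rows_needed(step, keep) for name, step, keep in archives}
total_rows = sum(row_counts.values())
approx_bytes = total_rows * BYTES_PER_VALUE

for name, count in row_counts.items():
    print(f"{name}: {count} rows")
print(f"total: {total_rows} rows, about {approx_bytes} bytes")
```

Under these assumptions the four streams need 2,400, 1,488, 1,200 and 1,095 slots, or 6,183 in total, which comes to about 49,000 bytes. That is consistent with the 50 Kbyte figure, and because the slot counts are fixed at creation time, the file stays that size no matter how long data is collected.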

Orca is a Perl script that reads a configuration file specifying where its input text data files are located, the general format of the input data files, where its RRD data files should be located and the root of the HTML tree to generate. The root document of the HTML tree, index.html, lists each host and, separately, each different measurement made. Clicking on a link takes the viewer to a page showing actual plots. Each plot shows a daily, weekly, monthly, or yearly view of the data in question. Orca allows easy comparison of the same measurement on different systems by listing all the same measurements on a single web page.

In its normal mode Orca runs continuously, sleeping until orcallator.se places new data into the output data files. Once orcallator.se has written new data to a file, Orca updates the RRD data files and recreates any GIFs that need updating.
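
One way to implement this "wake up and process only what is new" behavior is to remember how far into each file the reader has already gotten. The Python sketch below shows that technique; it is an assumption about how such a loop could be written, not a description of Orca's Perl code, and the file name and row contents are hypothetical.

```python
import os
import tempfile


def read_new_lines(path, offset):
    """Return (complete new lines, new offset) for data appended since offset."""
    with open(path, "rb") as handle:
        handle.seek(offset)
        chunk = handle.read()
    # Consume only up to the last newline so that a row the collector is
    # still writing is left in place and picked up on the next pass.
    end = chunk.rfind(b"\n") + 1
    lines = chunk[:end].decode("ascii", errors="replace").splitlines()
    return lines, offset + end


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "measurements.txt")  # hypothetical file name

    with open(path, "wb") as out:
        out.write(b"931000000 12.5\n")
    first, offset = read_new_lines(path, 0)

    # The collector appends one full row and the start of another.
    with open(path, "ab") as out:
        out.write(b"931000300 30.0\n931000600 2")
    second, offset = read_new_lines(path, offset)

print(first)   # ['931000000 12.5']
print(second)  # ['931000300 30.0']
```

A long-running process would call `read_new_lines` for each input file, feed the returned rows to its storage layer, and then sleep for a while before checking again.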

Using Orca has the following advantages:

It is freely available.

The source code is available, allowing modification and addition of the parameters measured at a particular site.

Orcallator.se runs as a single process on each monitored system and does not fork off any processes; hence it puts a minimal load on the system.

Orca can manage almost any text data file.

The only disadvantage to this solution is that the data collection portion, orcallator.se and the SE toolkit, is only available on SPARC and x86 Solaris platforms. Since Orca and RRD are platform independent, they can be used on any system, but a new data collection tool would have to be designed.

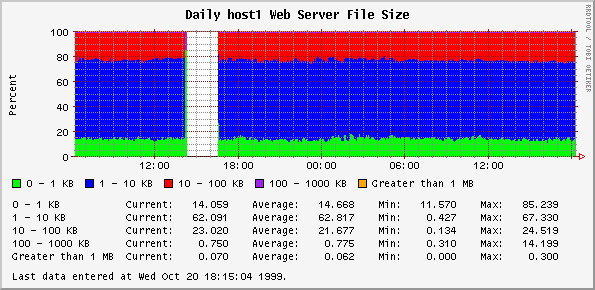

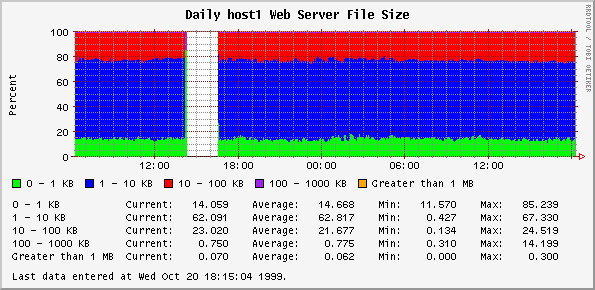

As an example of the output generated by Orca, these four plots show the daily, weekly, monthly, and yearly CPU utilization of one of our servers. Here, green represents the percentage of CPU time spent executing user code, blue represents the percentage of CPU time executing system code and red represents the percentage of CPU time when the CPU is idle.

A more complete example showing the comparison capabilities of Orca is located at http://www.orcaware.com/orca/orca-example/.

If the above solution is not applicable to your environment, then a custom real-time monitoring system must be designed. The first step in designing your system is determining the scope and requirements of the project. Answer the following questions: What are you measuring and for what purpose? How will the data be visualized? How quickly will the plots be generated when a new graph is requested from the system (continuous graph update versus user requested graph creation)? What tools will you have at your disposal? How long will you need to keep the recorded data around? And finally, do you have the resources (people, time and money) to create the system?

A real-time monitoring system involves several components. The first is the data collection tools. These may be programs that come with the operating system such as sar or netstat, freely available programs such as top or the SE toolkit, or commercial programs. The second is a data storage system. This may be as simple as using flat text files with each measurement on a separate line or as complicated as a real database. The data collection tools must be able to efficiently interface with the data storage system. The next step is determining what will gather and collate the input data into some groupings that make sense for presentation. What language will this be written in? This program must be able to interface with the data storage system as well as the next key component, the data visualization tools. Finally, how will your data be visualized? Do you want to view it in a web browser or something more powerful, such as a Java program that allows arbitrary groupings of data? Do you want a CGI script to create the graphs or have the graphs continuously updated? Personally, I dislike waiting for a CGI to generate an HTML page and the graph GIFs. It reduces the tendency for people to examine the data to see what may be wrong with the system and makes ad-hoc analysis more time consuming.

Before writing any code, see what tools and packages you can leverage to reduce the creation time of your system. My mantra is: do not reinvent the wheel, but feel free to improve it. I have always found that other authors are happy to accept additions and improvements to their code.

There are some things to watch out for in designing your own real-time monitoring system. The first is scalability as the number of monitored systems increases. What happens to the system as the number of hosts increases from 10 to 100? The initial Orca worked well with 10 hosts, but internal data structures ballooned when monitoring 100 hosts. The system should also be fast, since it is real-time. If the two previous issues are not that important, then the following one should be. The measuring tools should be as lightweight as possible on a running system. Ideally, create a tool that does not fork off any processes and does not need to be invoked via cron. Any tool that spawns other processes will impact the measurements it is making. If you have a Bourne or C-shell script that forks many processes to make measurements or process output from other programs, consider rewriting the code in Perl or SE if possible to reduce the number of additional processes on the system.
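
The lightweight-collector advice can be sketched as a single long-running process that sleeps between samples and gathers its numbers through in-process calls rather than by spawning other programs. The Python example below is only a sketch of that shape: the function names, the output format, and the choice of load averages as the measurement are illustrative assumptions.

```python
import os
import tempfile
import time


def load_averages():
    """In-process measurement: a library call, no child process is forked."""
    return os.getloadavg()  # available on Unix-like systems


def collect(path, measure, interval_seconds, samples):
    """Append one timestamped row per interval from a single process.

    The loop sleeps between samples itself, so no cron entry is needed.
    """
    with open(path, "a") as out:
        for n in range(samples):
            values = measure()
            fields = [str(int(time.time()))] + [f"{v:.2f}" for v in values]
            out.write(" ".join(fields) + "\n")
            out.flush()  # make the row visible to readers right away
            if n + 1 < samples:
                time.sleep(interval_seconds)


# Demonstration with a fixed, made-up measurement and no delay between rows.
with tempfile.TemporaryDirectory() as tmp:
    demo_path = os.path.join(tmp, "collector.txt")
    collect(demo_path, lambda: (0.5, 0.25), interval_seconds=0, samples=3)
    with open(demo_path) as handle:
        demo_rows = handle.read().splitlines()

print(demo_rows)
```

On a real host one would pass something like `load_averages` as the `measure` argument and an interval of 300 seconds. The important property is that each sample costs one function call and one short write, so the act of measuring adds very little to the load being measured.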

Orca home page

http://www.orcaware.com/orca/

Orca example HTML tree

http://www.orcaware.com/orca/orca-example/

SE toolkit home page

http://www.setoolkit.com/

RRD home page

http://people.ethz.ch/~oetiker/webtools/rrdtool/

Top FTP site

ftp://ftp.groupsys.com/pub/top/